These 7 points are essential for any technical SEO checklist of on-page factors. SEO is not only about keyword research and content. SEO marketers also need some technical know-how to gain maximum organic traffic and rank high in search engines.

Here's a 7-point technical SEO checklist for on-page SEO in 2020, covering the main components of an SEO checklist:

Rich Snippets

Rich Snippets are also known as "Rich Results". These are search results displayed with additional data. You need a bit of HTML know-how for this, because the data you want the search engine to capture and highlight first has to be described with schema markup, which you can generate using a schema markup tool. The generated script is then placed in the HTML of the web page, in either the head or the body. This makes it easier for the search engine to read and understand the content on your page. Common Rich Snippet types include reviews, recipes and events. This is a trending on-page SEO factor for SEO marketing in 2020.
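To make the idea of schema markup concrete, here is a minimal sketch in Python that produces a JSON-LD script element for a recipe. The function name `json_ld_script` and the recipe data are made up for illustration, and a real page would likely need more properties than shown before a search engine displays a rich result.

```python
import json


def json_ld_script(data: dict) -> str:
    """Wrap a schema.org description in a JSON-LD script element."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so the payload cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical recipe data, for illustration only.
recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Example Banana Bread",
    "recipeIngredient": ["3 bananas", "2 cups flour"],
}

snippet = json_ld_script(recipe)
print(snippet)
```

The printed element can be pasted into the page's HTML. It is worth checking the result with a structured data testing tool before publishing.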

Canonicalization

If you have identical content at different URLs on your website, the canonical tag is a way to tell search engines to treat them as one. It tells search engines that a specific URL represents the master copy of a page, which reduces the risk of the copies being treated as duplicate or repeated content. It is indicated with the rel="canonical" attribute on a link element. By using a canonical tag you tell search engines which version of a URL you would prefer to appear in search results. Search engines treat this as a strong hint rather than a command. Canonicalization is another important on-page SEO factor and belongs on your technical SEO checklist for SEO marketing.
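As a rough illustration, the sketch below maps several URL variants of the same page to one canonical link element. The helper `canonical_link_tag` is hypothetical, and dropping the whole query string and fragment is an assumption made for this example. Sites whose query parameters select different content would need a different rule.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url: str) -> str:
    """Build a canonical link element for a URL variant."""
    parts = urlsplit(url)
    # Assumption: query string and fragment never change the content.
    canonical = urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'


# Hypothetical variants of one product page.
variants = [
    "https://www.example.com/shoes",
    "https://www.example.com/shoes?utm_source=newsletter",
    "https://WWW.EXAMPLE.COM/shoes#reviews",
]

# All three variants collapse to a single tag.
tags = {canonical_link_tag(u) for u in variants}
print(tags)
```

The resulting element goes in the head of every variant of the page, so each copy points to the same master URL.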

Sitemap

A sitemap is a blueprint of your website that helps search engines find, crawl and index all of your website’s content. Sitemaps also tell search engines which pages on your site are most important. A sitemap does not oblige search engines to index a certain page. Rather, it is a way of informing search engines that there is relevant content on the website that you would like to get indexed.

Sitemaps are of 4 main types:

- Normal XML Sitemap

- Video Sitemap

- News Sitemap

- Image Sitemap

Sitemaps belong on your technical on-page SEO checklist.
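For the normal XML sitemap, a minimal sketch using Python's standard library could look like this. The function `build_sitemap`, the page URLs and the dates are placeholders for illustration. The namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
pages = [
    ("https://www.example.com/", "2020-01-15"),
    ("https://www.example.com/blog/", "2020-02-01"),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The output is typically saved as sitemap.xml at the root of the site and submitted to search engines through their webmaster tools.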

301 Redirect

A 301 redirect is a permanent redirect that notifies the search engine that a certain link has been permanently moved to another URL. In most instances, the 301 redirect is the best method for implementing redirects on a website. Serving a 301 indicates to both browsers and search engine bots that the page has moved permanently. Search engines interpret this to mean that not only has the page changed location, but that the content, or an updated version of it, can be found at the new URL.
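What a 301 looks like on the wire can be sketched with a tiny WSGI application in Python. The redirect table, the paths and the `request` helper are invented for this example. In practice redirects are often configured in the web server or CMS rather than in application code.

```python
# Hypothetical mapping of old paths to their new permanent locations.
REDIRECTS = {"/old-pricing": "/pricing"}


def app(environ, start_response):
    """Minimal WSGI app that answers moved paths with a 301."""
    path = environ.get("PATH_INFO", "/")
    target = REDIRECTS.get(path)
    if target is not None:
        # The Location header tells browsers and bots where the page now lives.
        start_response("301 Moved Permanently", [("Location", target)])
        return [b""]
    start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
    return [b"ok"]


def request(path):
    """Call the app directly, without a server, and collect the response."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app({"PATH_INFO": path}, start_response))
    return captured["status"], captured["headers"], body


status, headers, _ = request("/old-pricing")
print(status, headers["Location"])
```

The status line and the Location header together are what signal the permanent move.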

404 Error

You are probably well aware of the 404 Page Not Found error. It indicates that the web page being requested has been moved or deleted. Most such pages are left as they are, but from an SEO perspective it is recommended that you put the error page to good use, as creatively as possible. Some suggestions: write a funny 404 not found message, display your contact info, or include a link to your active website.
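The suggestions above can be sketched as a small Python function that renders a friendlier error page. The function name `render_404`, the wording and the addresses are placeholders. One detail worth keeping is that the response still carries the 404 status, so search engines do not mistake the error page for real content.

```python
from html import escape


def render_404(path, home_url, contact_email):
    """Return a (status, html) pair for a custom not-found page."""
    body = (
        "<h1>Oops, this page wandered off</h1>\n"
        f"<p>We could not find <code>{escape(path)}</code>.</p>\n"
        f'<p><a href="{escape(home_url, quote=True)}">Back to the home page</a></p>\n'
        f'<p>Need help? Email <a href="mailto:{escape(contact_email, quote=True)}">'
        f"{escape(contact_email)}</a>.</p>"
    )
    # Keep the real 404 status even though the page is friendly.
    return "404 Not Found", body


# Hypothetical site details, for illustration only.
status, page = render_404("/missing", "https://www.example.com/", "hello@example.com")
print(status)
print(page)
```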

Geotags

Geotags are of great help when you want your local business to be discovered online. Geotagging is a technique that lets you tag your website, content, RSS feeds, and other things with a location. You can use latitude and longitude to let your viewers know exactly where you are or where something is. You can also add elements other than latitude and longitude, such as descriptions, titles and names, to make the tag more personalized.
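One common way to geotag a web page is with geo meta tags in the page head. The Python sketch below generates them from a latitude, a longitude, a place name and a region code. The function `geo_meta_tags` and the sample location are illustrative assumptions, and the meta names follow a long-standing convention rather than anything stated in this article.

```python
from html import escape


def geo_meta_tags(latitude, longitude, placename, region):
    """Build conventional geo meta tags for a page head."""
    fields = [
        ("geo.position", f"{latitude};{longitude}"),
        ("ICBM", f"{latitude}, {longitude}"),
        ("geo.placename", placename),
        ("geo.region", region),
    ]
    return [
        f'<meta name="{name}" content="{escape(value, quote=True)}">'
        for name, value in fields
    ]


# Hypothetical business location, for illustration only.
tags = geo_meta_tags(40.7128, -74.006, "New York City", "US-NY")
print("\n".join(tags))
```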

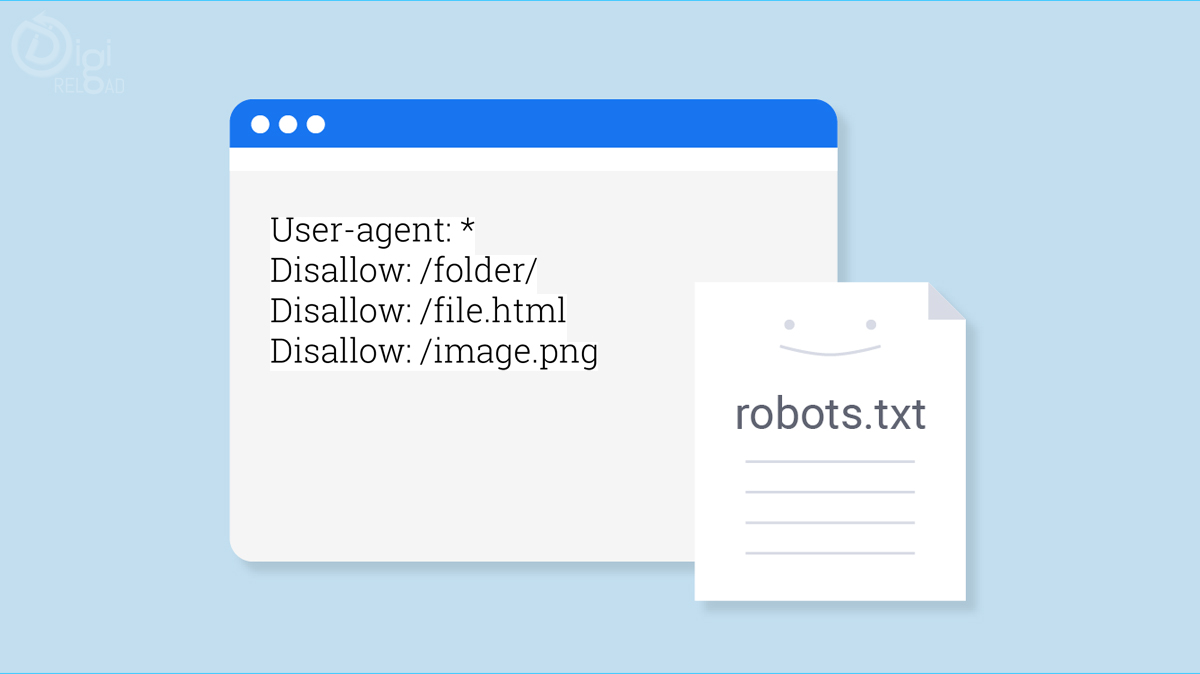

Robots.txt

Put simply, robots.txt is a set of instructions telling web robots how to crawl the pages on a website. Using robots.txt, you tell web crawlers which pages are to be crawled and which are to be skipped. These crawl instructions are specified by “disallowing” or “allowing” the behavior of specific user agents, or of all of them.
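To see how allow and disallow rules play out, the sketch below feeds a small sample robots.txt to Python's standard `urllib.robotparser` module and asks whether two URLs may be crawled. The rules and URLs are invented for this example. Individual crawlers may resolve conflicting rules differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block the admin area, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether any crawler ("*") may fetch each URL.
admin_allowed = parser.can_fetch("*", "https://www.example.com/admin/settings")
blog_allowed = parser.can_fetch("*", "https://www.example.com/blog/")
print(admin_allowed, blog_allowed)
```

The file itself lives at the root of the site, for example at /robots.txt, which is where crawlers look for it.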
